Healthcare digital transformation challenges: Can we enable healthcare systems to trust their data?

|

|

At SciBite, we are passionate about enabling organizations to make full use of their data to help them make evidence-based decisions, especially to help organizations overcome their healthcare digital transformation challenges. To support organizations on this journey, we offer a suite of products to help organizations adopt FAIR data standards.

For example, City of Hope, a healthcare organization with more than 35 locations in Southern California and additional facilities in Arizona, Illinois and Georgia recently made use of SciBite’s award-winning Named Entity Recognition (NER) tool, TERMite, to normalize their data within POSEIDON (Precision Oncology Software Environment Interoperable Data Ontologies Network) their secure, cloud-based Oncology Analytics and Insights platform developed on DNAnexus®.

What is the problem?

Today, most healthcare organization executives do not fully trust their own data as shown in InterSystems recent 2021 study. The reasons why executives do not trust their data is due to issues surrounding three key areas: data quality, interoperability, and data accessibility.

Focusing on poor data quality, one of the largest issues leading to poor data quality is due to the lack of normalization of the data. To explain what we mean by normalization, let us take two medication records for acetaminophen that could be built in an EMR (Electronic Medical Record).

These medication records could be named differently to indicate a distinct difference in how the medication is billed to the patient. One might be named, “Paracetamol”, while another is named “Acetaminophen”. If a user were to search to find all patients taking “Acetaminophen”, they would miss all the patients taking generic paracetamol; since the system does not recognize these are two synonyms representing the same thing/entity.

This core problem can be seen not only with just medications, but also across multiple domains in healthcare including, but not limited to, the following:

- Medications

- Lab Results

- Diagnoses

- Genomic Data

Why are these issues a major problem? What are the real-world effects?

At the executive level, the tangible financial impact of poor data quality is apparent. To make evidence-based, data-driven decisions, quality data is required. To understate the impact of data quality, in 2019, an independent European Commission released a comprehensive report that analyzed the financial impact of not having FAIR research data. They found that not having FAIR data could result in a minimum of €10.2bn in lost expenditures per year in the European economy.

Further to the financial impact, poor data quality can lead to the following negative impacts for organizations (among other consequences):

- The inability to make timely decisions.

- Challenges meeting or exceeding quality measures including re-admission rates, etc.

- Lost time for researchers when gathering data.

- Lack of trust in the data by all parties involved.

- Duplicative work was undertaken due to the lack of trust in the data.

Poor data quality manifests downstream throughout an organization. If executives do not fully trust the data, it is impossible to use this data to drive any decision-making at an organization. Ultimately, this lack of trust will eventually extend to all users of the organization, including doctors, nurses, clinicians, and administrative staff.

As a result, if doctors and nurses do not trust the data, any clinical insights that can be gathered by the data will not be implemented in clinical practice. This can include not choosing the correct therapy for a patient provided by Health economics and outcomes research (HEOR) or not trusting nursing impact metrics showing that one nurse is not giving medication as efficiently as they could. Additionally, if the data is not trusted, duplicative work could be undertaken to confirm conclusions.

Similarly, for researchers, the issue makes it next to impossible to efficiently find all participants that might qualify for a particular study. Studies typically follow the following process:

- Researchers define the parameters for a particular study in accordance with their idea.

- Researcher defines the study population criteria and gathers funding from various sources.

- They do this by going to an institutional review board (IRB) and proposing their study purpose.

- In this review process, the IRB defines with the researcher the specific criteria for a patient participant.

- Researcher finds enough patients that meet the strict criteria of the study.

- If they do not find enough patients, the study is dead on arrival.

- Study continues and researcher completes study.

As we know, the participant criteria set by the researcher and the institutional review board are quite complicated. With poor data quality, the tools available to find enough participants are largely insufficient and could result in researchers waiting up to several years to just start their study.

How can healthcare organizations solve this problem?

To summarize, so far, we have seen that there is both a substantial financial and practical incentive to adopt FAIR data standards to overcome healthcare digital transformation challenges.

To gain the benefits we have mentioned, healthcare organizations need to make their data FAIR . The first step to making their data FAIR is normalizing their data. So, the question becomes the following: How can organizations normalize their data for all consumers of that data?

Let us take our previous medication record example – Paracetamol vs. Acetaminophen. To normalize that specific data, an organization would need to agree on a standard term to describe that medication. For this example, let us say that the standard term is Paracetamol. From there, whenever that term (Paracetamol) or a synonym (Acetaminophen) of that entity is administered to a patient, we can then align the data to the standard that was decided previously.

If we expand this example to generic data domains, to normalize all data in a certain data domain (medications, diagnoses, etc.), organizations first need to agree on standard vocabularies or languages in those different data domains. They need to manage these vocabularies across their organization for each domain. As an example, Public standards for the domains mentioned previously in this post are shown below:

- Medication data -MeSH, MEdDRA, IDMP

- Diagnoses – ICD-9-CM, ICD-10-CM, SNOMED

- Lab data – LOINC

- Genomic data – Gene Ontology

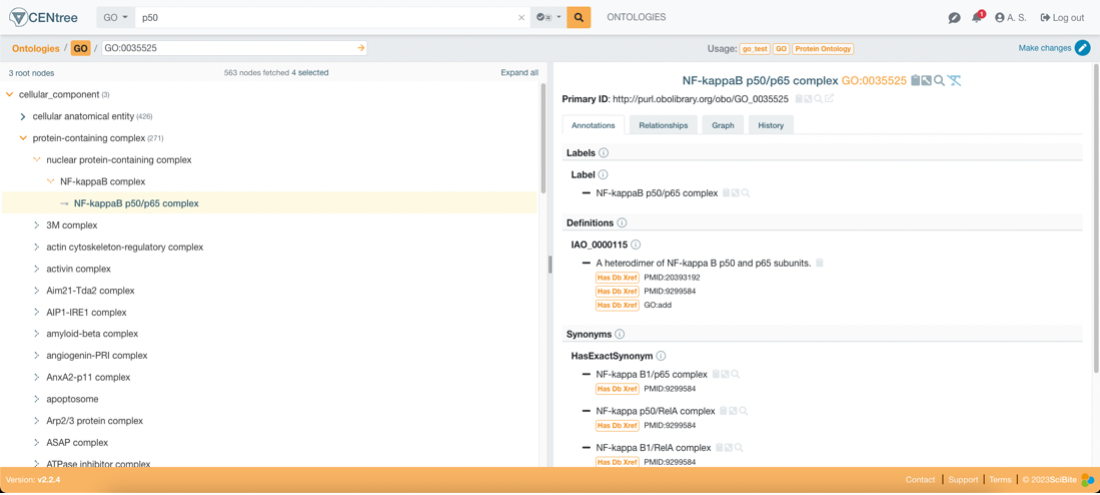

Using CENtree, SciBite’s award-winning ontology management platform, organizations can manage these standards. CENtree enables users to participate in a democratized management process to ensure the vocabularies are up to date. Below is an image of our MeSH SC ontology loaded in CENtree:

Once these standards are agreed upon and managed across the organization, they can be used to normalize data by annotating semantic information from patient records. TERMite, SciBite’s award-winning named entity recognition (NER) engine facilitates the extraction of these terms from records and aligns them to the vocabularies defined previously. TERMite is powered by our companion product VOCabs – VOCabs are ontology or standard derived dictionaries that allow for high precision and recall entity extraction and annotation. VOCabs can be managed and augmented by CENtree to specialize them for specific use cases.

Importantly, in relation to interoperability, all SciBite products, including CENtree and TERMite, are built API first, which enables organizations to integrate them with their production workflows simply. Extensive API documentation, including Swagger documentation, is available to ensure data scientists and application analysts can confidently create production workflows, as necessary.

What use cases can be fully investigated with data of high quality?

Normalized reusable clean data can contribute to many stages of the research cycle. With this type of data, you can theoretically use it for any use case. Our passion is making your data work harder for you and enabling your organization to investigate any potential use case for that data. If you would like to hear about how specifically SciBite’s platform can help in the example use cases mentioned below, please contact us.

- Progression Free Survival and Disease Progression/Remission

- Readmission Metrics

- Nursing Impact Metrics

- Intervention/Drug Impact (HEOR)

- Study Recruitment and Clinical Trial Efficiency

- Gene-Indication Relationships

- Assay Registration

- CAR T Therapy

Connect with SciBite

Connect with SciBite today to hear more about how our stack can help your organization normalize your data and overcome your healthcare digital transformation challenges. By taking our lessons learned from our successful collaborations, like our close collaboration with City of Hope, we can help you unlock the full power of your data.

About Arvind Swaminathan

Technical Consultant, SciBite

Arvind Swaminathan, Technical Consultant. He is passionate in helping organizations overcome their digital transformation challenges to enable data discovery and research. Over his professional career, first at Epic Systems, Arvind has worked in the healthcare space to help clean and aggregate data for research and commercial use. He has been with SciBite since 2022.

Other articles by Arvind

1. [Blog] Unlocking Important RWE from Patient Data – Why and How? read more.

Related articles

-

Unlocking important RWE from patient data (Part 1) – Why and how?

In this three-part blog series, we explore the challenges healthcare organizations face in unlocking patient data for real-world evidence. In part 1 Unlocking Important Real World Evidence (RWE) from Patient Data – Why and How?

Read -

Delivery of precision medicine through alignment of clinical data to ontologies

Precision medicine is changing the way that we think about the treatment of disease, moving from broad-acting therapies to therapies tailored to the individual patient. This increasingly relies on real-world data (RWD), encompassing a diverse range of sources, spanning multi-omic molecular characterisation of the patient’s condition, clinical presentation, treatment, and broader medical histories.

Read

How could the SciBite semantic platform help you?

Get in touch with us to find out how we can transform your data

© Copyright © 2024 Elsevier Ltd., its licensors, and contributors. All rights are reserved, including those for text and data mining, AI training, and similar technologies.